Jesse's 2025 TLA+ Community Event Notes

Here are my notes from the 2025 TLA+ Community Event. See also my colleague Murat Demirbas’s notes (he was one of the event’s organizers). The talks were all recorded, and the videos, slides, and abstracts are posted.

Some years there are TLA+ “conferences” co-located with industry conferences, and some years there are “community events” co-located with academic conferences. This year was a community event, the first TLA+ community event in North America. There were about 20 participants, evenly divided between academia and industry. I knew most of them already. If I recall correctly, the last TLA+ thing I attended was the 2021 conference; it had more participants, perhaps because it was co-located with the huge StrangeLoop industry conference.

ModelFuzz: Model guided fuzzing of distributed systems #

Srinidhi Nagendra, Max Planck Institute for Software Systems.

Srini summarized his paper, Model-guided Fuzzing of Distributed Systems. Srini’s ModelFuzz is coverage-guided fuzzing of the implementation, where “coverage” is defined by the model. ModelFuzz executes an implementation test (which executes a workload and occasionally crashes or restarts a server), captures an execution trace, maps the trace back to a sequence of TLA+ model states, and guides the next round of tests to maximize coverage of the TLA+ model states. For distributed systems, maximizing this sort of coverage is a more efficient way to discover bugs than e.g. branch coverage like AFL uses.

Srini’s example is a Raft spec and several Raft implementations. He records all messages among Raft nodes, as well as events like node crashes, and replays this execution on the model. The spec had to be slightly restructured to make it easier to map implementation actions to model actions. He also needed to “abstract states” (something like a symmetry set in TLA+ config) so that states which differ in uninteresting ways are not considered distinct states that improve coverage. E.g., if you crash the leader 1000 times, you’ll get 1000 terms without truly exploring new states. Choosing the right model abstraction is an art. I don’t understand why he reinvented this idea instead of just using TLA+’s existing symmetry sets or views.

ModelFuzz generates implementation tests in a really surprising way. Srini created a central proxy through which all Raft messages pass. ModelFuzz counts the number of messages that are delivered to each server, and then “mutates” a test by buffering messages in the proxy and changing the order in which the proxy delivers messages, as well as perturbing the schedule of server crashes. There is a lot of nondeterminism in the system already and ModelFuzz is jiggling it even more. Srini shows that mutating tests strategically will moderately improve model coverage, compared to just rerunning the test many times and relying on inherent nondeterminism to improve model coverage, and compared to purely random mutation of tests. The charts he showed us seemed to show a rather small improvement, but he found a lot of new bugs!

It’s interesting that Srini was able to explore more of the state space with an intervention that’s reasonably easy to implement. But I think Antithesis is better. Model-guided fuzzing is still useful, but I predict that Antithesis or other deterministic hypervisors will soon be the overwhelmingly dominant means of actually doing the fuzzing.

Automating Trace Validation with PGo #

Finn Hackett (presenting) and Ivan Beschastnikh, University of British Columbia.

Finn is my PhD fellow and will be my PhD intern starting in June. His PGo project translates a variant of PlusCal into Go. He wants to use trace-validation to check that the Go really matches the PlusCal; his trace-validator is called TraceLink. It uses log statements that are autogenerated along with the rest of the Go code, and it introspects the PlusCal to see what variables must be matched per trace line, and generates some TLA+ to validate a trace against the implementation. If validation fails, TraceLink provides some debug info, as TLA+ aliases that appear in TLC’s counterexample trace. This includes a list of enabled next-actions at the moment the trace diverged from the spec.

For each PlusCal label block (equivalent to a TLA+ action), PGo outputs critical section of Go code, which ends in commit/abort. If it commits, it updates the program’s state and logs the state transition for trace-checking’s sake. If it aborts it changes no state, but it still logs the state it observed, so even aborted critical sections can be trace-checked.

Finn’s system uses vector clocks to order log messages. This means there are many possible total orderings, and all of them should be valid behaviors according to the spec! (I keep forgetting and misunderstanding this.) A PGo-generated system should log all modeled variables on each state transition, and always increment the vector clock during every interaction between processes, so every one of the total orders is valid according to the spec. That gives TraceLink some options:

- check every possible log ordering against the spec

- check one arbitrarily-chosen ordering

- check a set of orderings that satisfies some coverage metric

Finn has chosen a hybrid: TraceLink arbitrarily chooses one ordering of the log and checks it against the spec, but for each log message it asserts that each possible next state is valid according to the spec, before it advances to a single next state and repeats. He says this gives him a nice compromise between performance and completeness.

TraceLink generates from the log a TLA+ spec, which validates that the trace conforms with the original PlusCal spec of the program. This trace-checking spec could be huge, but TraceLink compacts it by factoring out common “shapes” of validation and storing the values in a binary file that TLC can read.

Finn gets diverse traces from the implementation by adding an exponentially-distributed random sleep after each critical section.

This work is specific to implementations generated by PGo, could be adapted with some manual effort (add PGo-style instrumentation to existing implementation) or by building a PlusCal-to-another-language compiler that automatically adds this instrumentation.

Translating C to PlusCal for Model Checking of Safety Properties on Source Code #

Guillaume Di Fatta (presenting), Emmanuel Ohayon, and Amira Methni, Asterios Technologies.

Asterios is a defense contractor, they make a realtime microkernel for embedded systems. Guillaume and his colleagues wrote a tool that auto-translates the microkernel scheduler from C to PlusCal. The C is simple and self-contained, which helps. The translation progresses through a series of intermediate representations. He wrote a tiny stack-based VM in PlusCal to interpret the final IR. This is cool but outside my interests. An intriguing suggestion for future work: use model-checking to show the auto-translated PlusCal refines a more abstract spec.

TLA+ for All: Model Checking in a Python Notebook #

Konstantin Läufer (presenting) and George K. Thiruvathukal, Loyola University Chicago.

Konstantin talked about teaching formal methods to undergrads at his school and globally. He described where FM fits in a curriculum for CS students, particularly among other classes about software testing, which increase in power as they work through the curriculum. (He mentions the useful ZOMBIES mnemonic for unittesting!) He wants to integrate FM into existing classes, e.g. a data structures class could use Alloy or LEAN. Finally, he shows that he’s created a browser-based zero-install environment for running TLC.

Formal models for monotonic pipeline architectures #

J.-P. Bodeveix, A. Bonenfant, T. Carle, Mamoun Filali (presenting), C. Rochange.

They want to analyze the Worst-Case Execution Time (WCET) for pipelined hardware architectures. They’re concerned about “timing anomalies” e.g. where a cache hit makes execution slower. Mamoun didn’t discuss performance analysis in TLA+, though, he instead walked through a TLA+ spec and safety properties in detail.

TLA+ Modeling of MongoDB Transactions #

Murat Demirbas (presenting) and Will Schultz MongoDB

MongoDB introduced single-document transactions in version 3.2 (2015), multi-document transactions in version 4.0, (2018), and sharded transactions in version 4.2 (2019). Murat described the layers of the transaction algorithm: storage, replica set, sharded cluster. The algorithm is hard to reason about and verify: it was developed incrementally, its use of ClusterTime and speculative majority adds complexity, and some of the hard bugs are in fault-tolerance and the interactions of transactions with DDL and chunk migration between shards. For now, Murat’s and Will’s TLA+ spec disables chunk migration and ignores DDL.

To check isolation, our spec maintains a history variable “ops” where it stores the history of transactions. We use Crooks’s client-centric isolation definitions, modeled in TLA+ by Soethout. The interactions between transactions and timestamps are key to isolation guarantees.

Interesting audience question: Crooks’s formalism doesn’t catch phantoms due to predicate reads, how do we ensure we’re preventing phantoms? Murat: that’s only a problem with serializable isolation, but the strongest we provide is snapshot isolation.

We use model-based test case generation to check conformance of the storage layer spec and implementation. Since the spec is modular, the storage spec is separate from the other aspects of the transactions spec, which helps.

Murat and Will analyzed the permissiveness of our transaction protocols compared to the behaviors allowed by Crooks’s formalism. We allow 70-80% of the behaviors that are theoretically allowed for snapshot isolation and read-committed—this suggests if we were more permissive we could allow more parallelism. I have a draft of the paper that I haven’t read yet, now I’m really curious how they measured this.

Are We Serious About Using TLA+ For Statistical Properties? #

A. Jesse Jiryu Davis MongoDB

This was my presentation. Here’s the video and a detailed writeup. In summary: Marc Brooker in 2022 asked for tools that model performance and check safety, with a single spec. Markus Kuppe and Jack Vanlightly that year presented a way to do it in TLA+, but I think it’s an impractical prototype because it’s missing essential features. I asked, are we serious about making TLA+ do performance modeling? I reviewed some existing tools for ideas to inspire us: Java Modelling Tools, PRISM, Fizzbee, Runway. I proposed how we might do performance modeling in TLA+, and asked the audience whether we should proceed and how.

It’s never been easier to write TLA⁺ tooling! #

Andrew described the existing infrastructure on which you can build your own TLA+ tools. There are a bunch of TLA+ parsers, SANY is the only full-featured one. Andrew’s Tree-Sitter grammar is good for prototyping new TLA+ notation because it tells you how much ambiguity you’d introduce. For model-checking there’s TLC, Apalache, and now Will Schultz’s Spectacle can do some model-checking. There’s Snowcat (a type-checker), two auto-formatters (tlaplus-formatter and tlafmt), LSP (language server protocol), Andrew’s TLAUC (Unicode converter), and the TLA+ VS Code extension.

The main tools have a lot of legacy code, defined as “code without tests” or, according to Andrew, “code that no one understands.” SANY & TLC were started 25 years ago, overhauled 10 years ago for version 2, those authors are gone now. Markus knows more than anyone, but there are large sections of the code he doesn’t know / no one knows. The model-checking code is straightforward, but TLAPM (the proof system) is esoteric. TLAPM was started with a grant at INRIA but is not vigorously developed now. Apalache was spun off by Informal Systems but it hasn’t been adopted by the TLA+ Foundation; it’s quiescent.

Andrew wants a world where we’re unafraid to make large changes to core TLA+ tools. He’s written a lot of parser tests with his TLA+ Foundation funding, but we need more tests. We should onboard new core contributors: Andrew is writing onboarding docs and tutorials for contributors. He’s also written how to create a new TLA+ tool.

He presented some possible future directions, like simplifying and modernizing TLA+ syntax, and improving model-checking performance 1000x.

Announcements #

Markus says the TLA+ issue tracker in GitHub now labels some issues as TLA+ Foundation funding candidates. He describes the grant proposal process.

Markus demonstrated, with a sped-up video, that GitHub Copilot can solve the Diehard problem with TLA+ in 4 minutes of reasoning, with some human intervention. We should explore TLA+ plus LLMs more. The Foundation and NVidia came up with the “TLAI” challenge, for novel combos of TLA+ and AI.

We’ll have a TLA+ conference / community event next year, but we don’t yet know where or when.

Extracurriculars #

Andrew Helwer and I visited Niagara Falls the day after the conference. He’d never seen them. I saw the falls when I was a kid and hardly remember, aside from a memory of a helicopter ride I took over the falls with my mother. She’d said, “This is so expensive, promise you won’t blink for the whole ride.”

I give the falls 3 out of 5 stars: lots of water, but not very tall, and you can’t see much because of all the mist.

From the delightful “Subpar Parks”.

From the delightful “Subpar Parks”.

We had a great time in the vast and rusty old powerplant, a total steampunk playground, with a half-mile tunnel where you walk deep underground from the subterranean turbines to the base of the falls. There’s a hagiographic exhibit about Nikola Tesla, the wronged genius who single-handedly invented electricity, radios, and VTOL aircraft? The show turned out to be a propaganda operation by the Serbian government—Tesla was born in Serbia.

Andrew and I hiked around the Niagara Glen Nature Reserve, where we saw some lovely mossy boulders, and Canada goose families with floppy goslings.

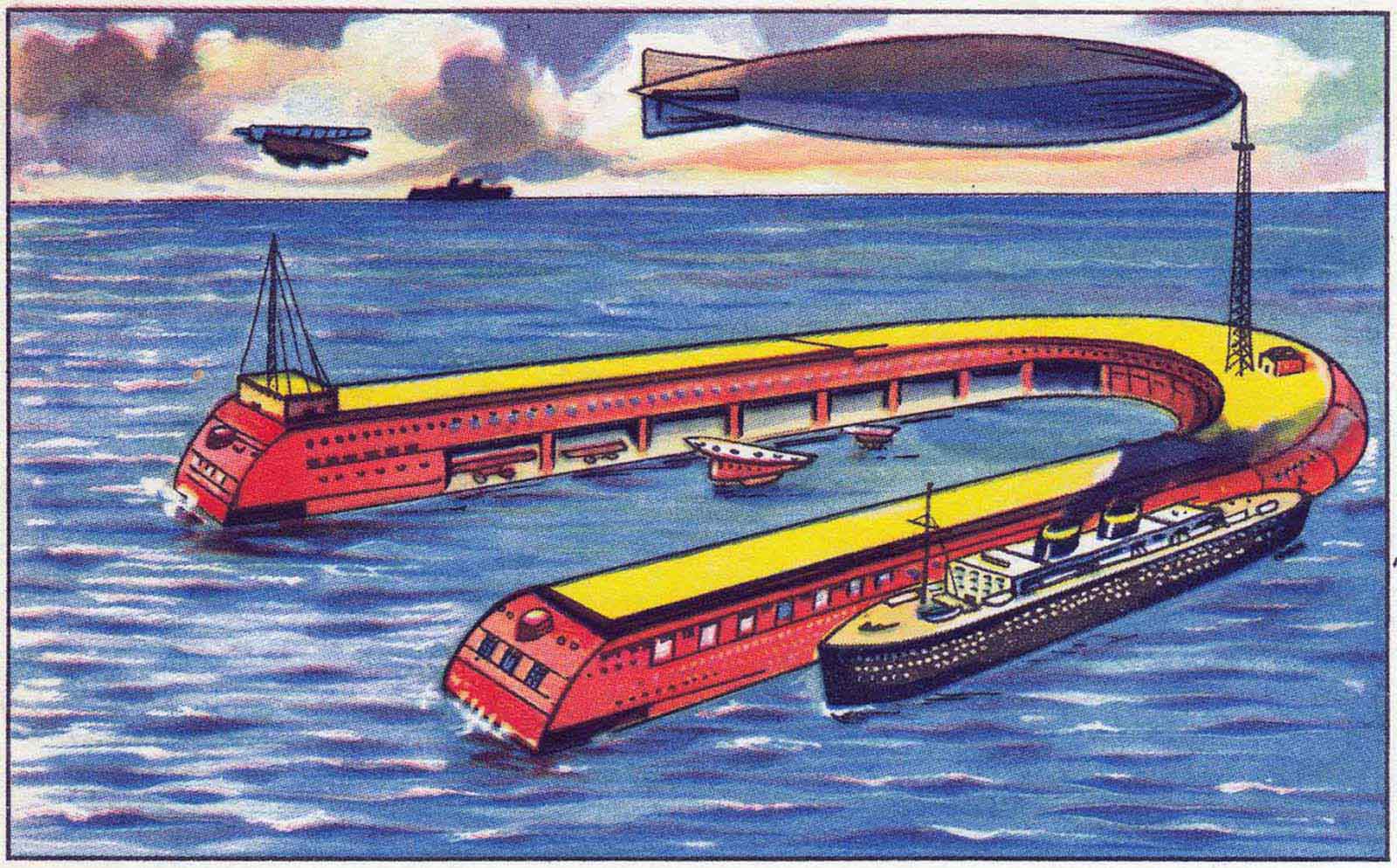

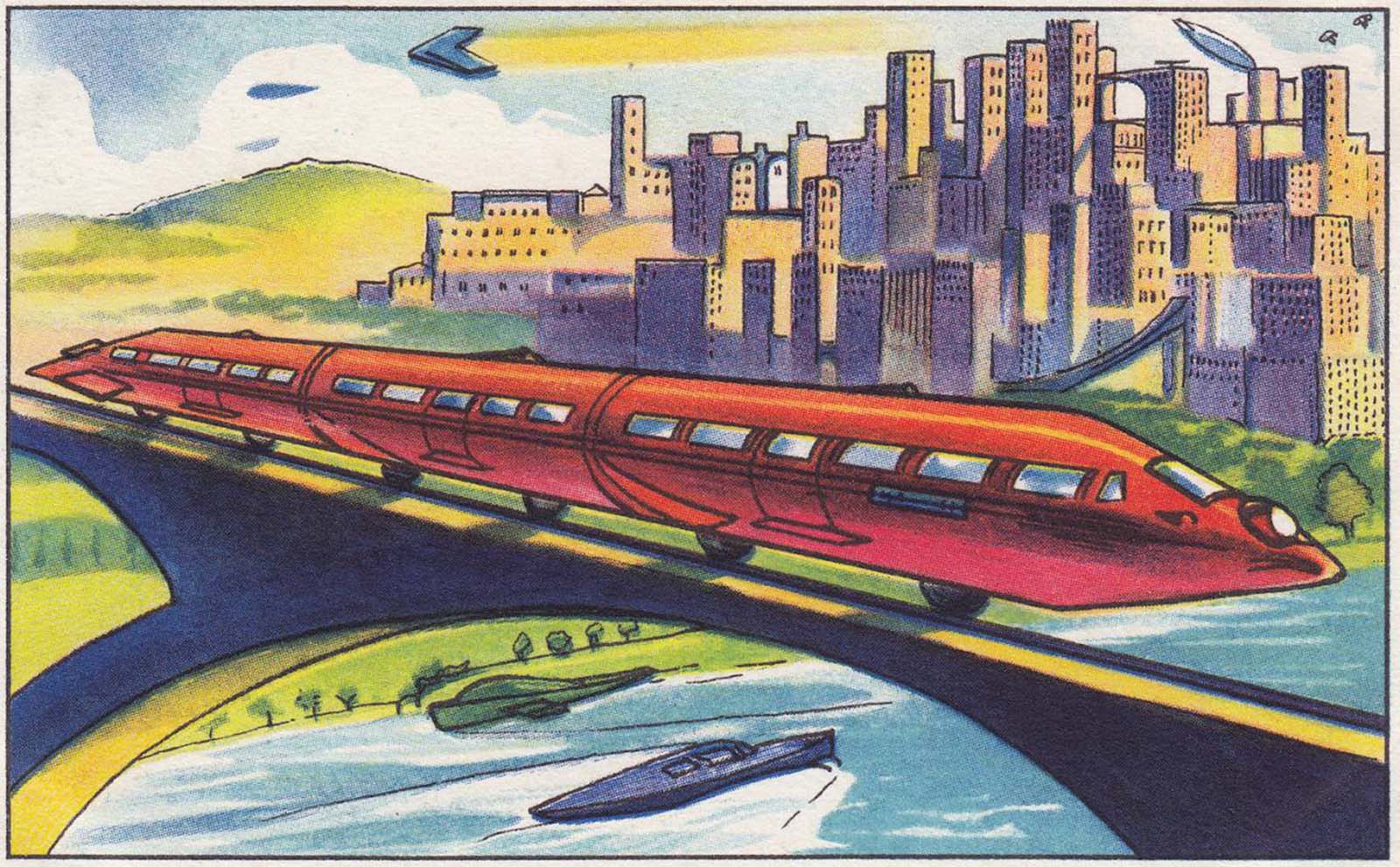

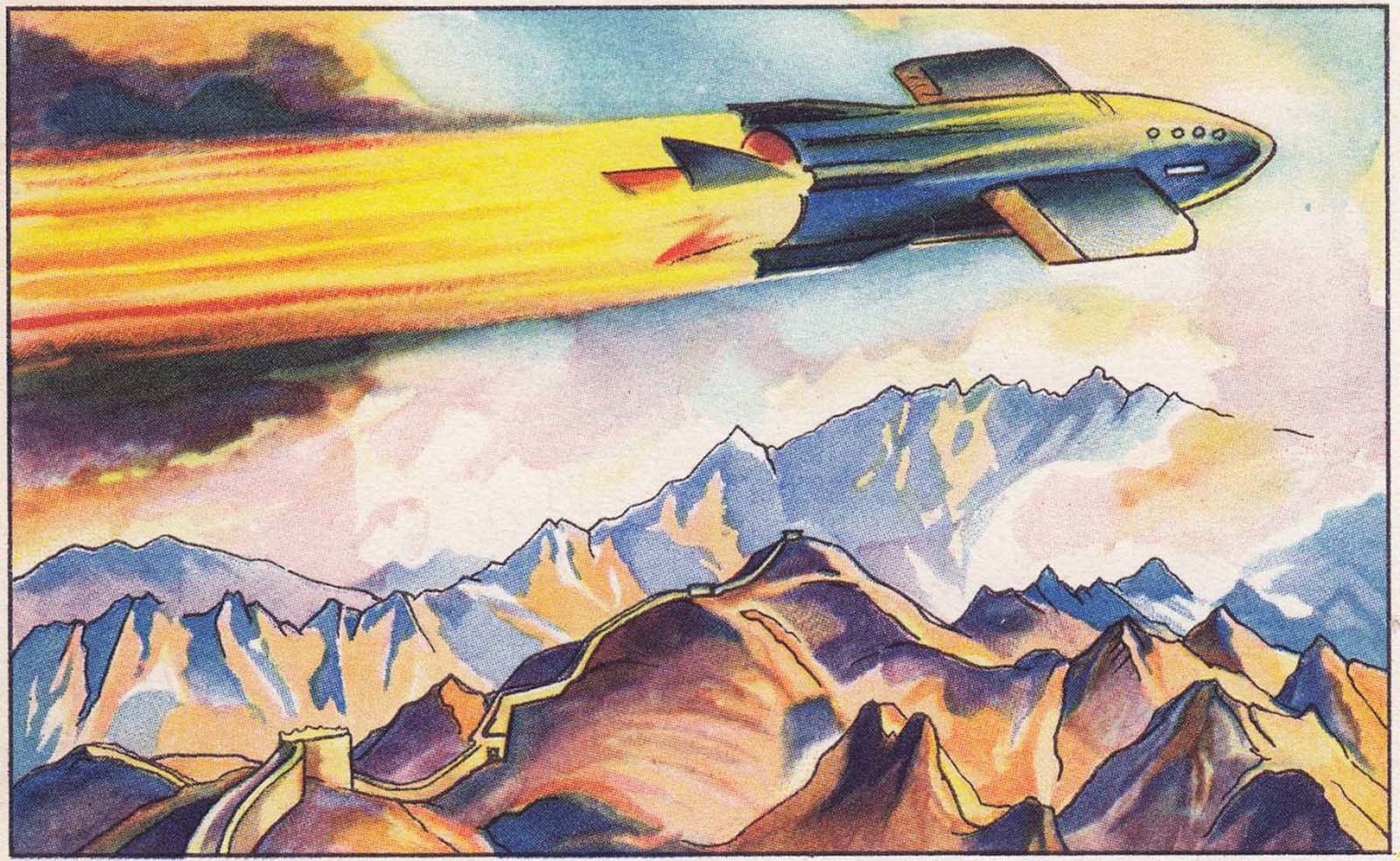

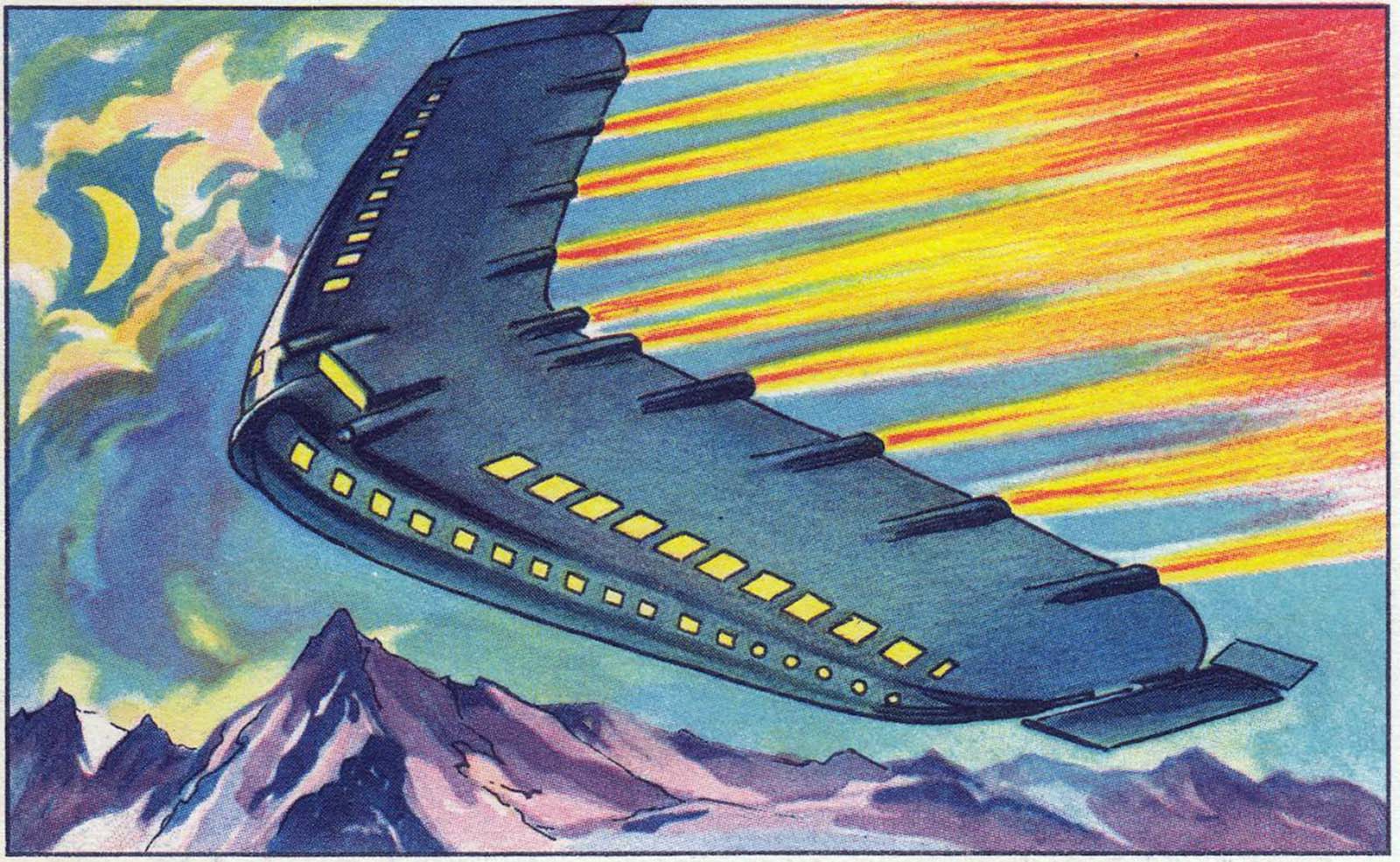

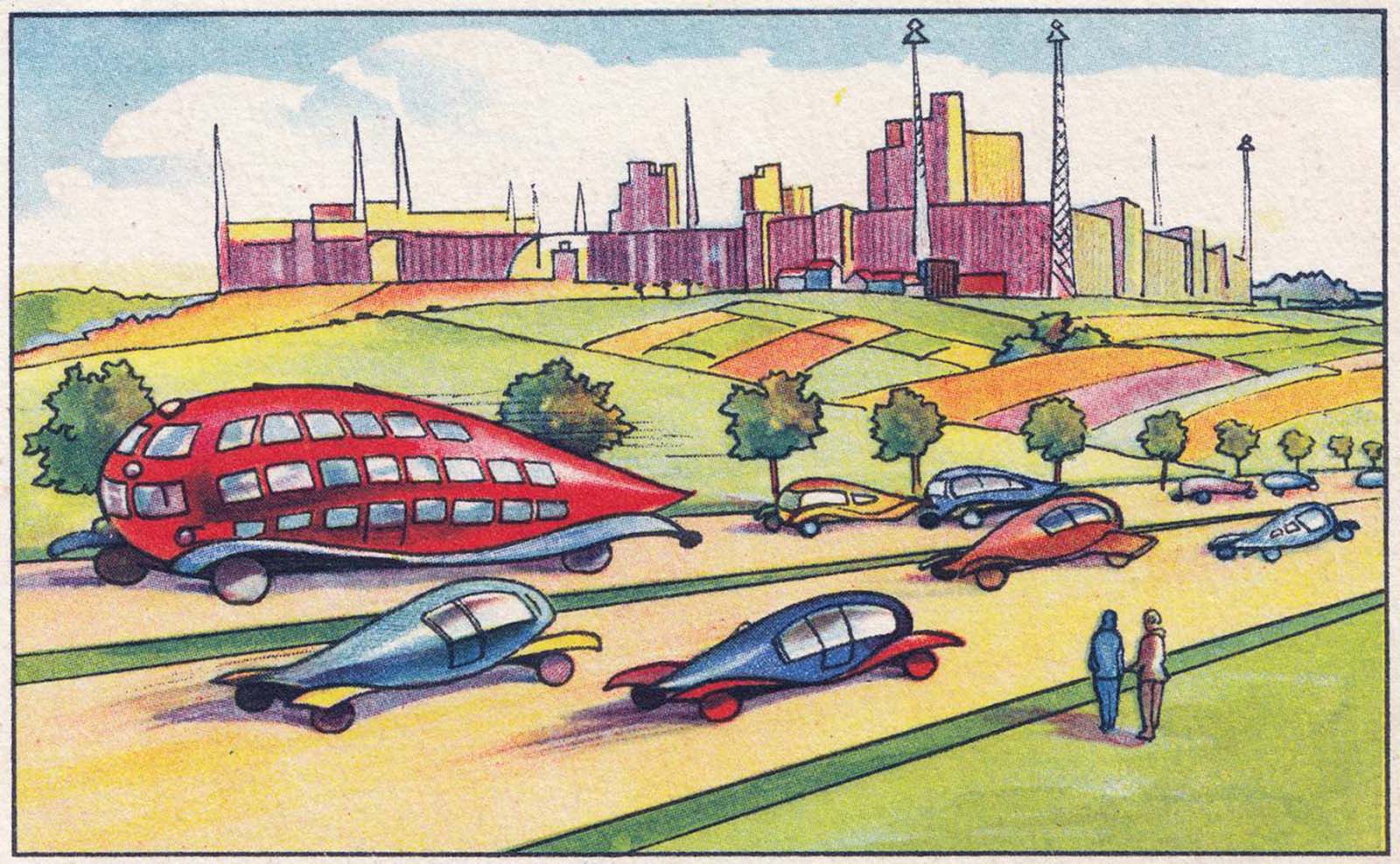

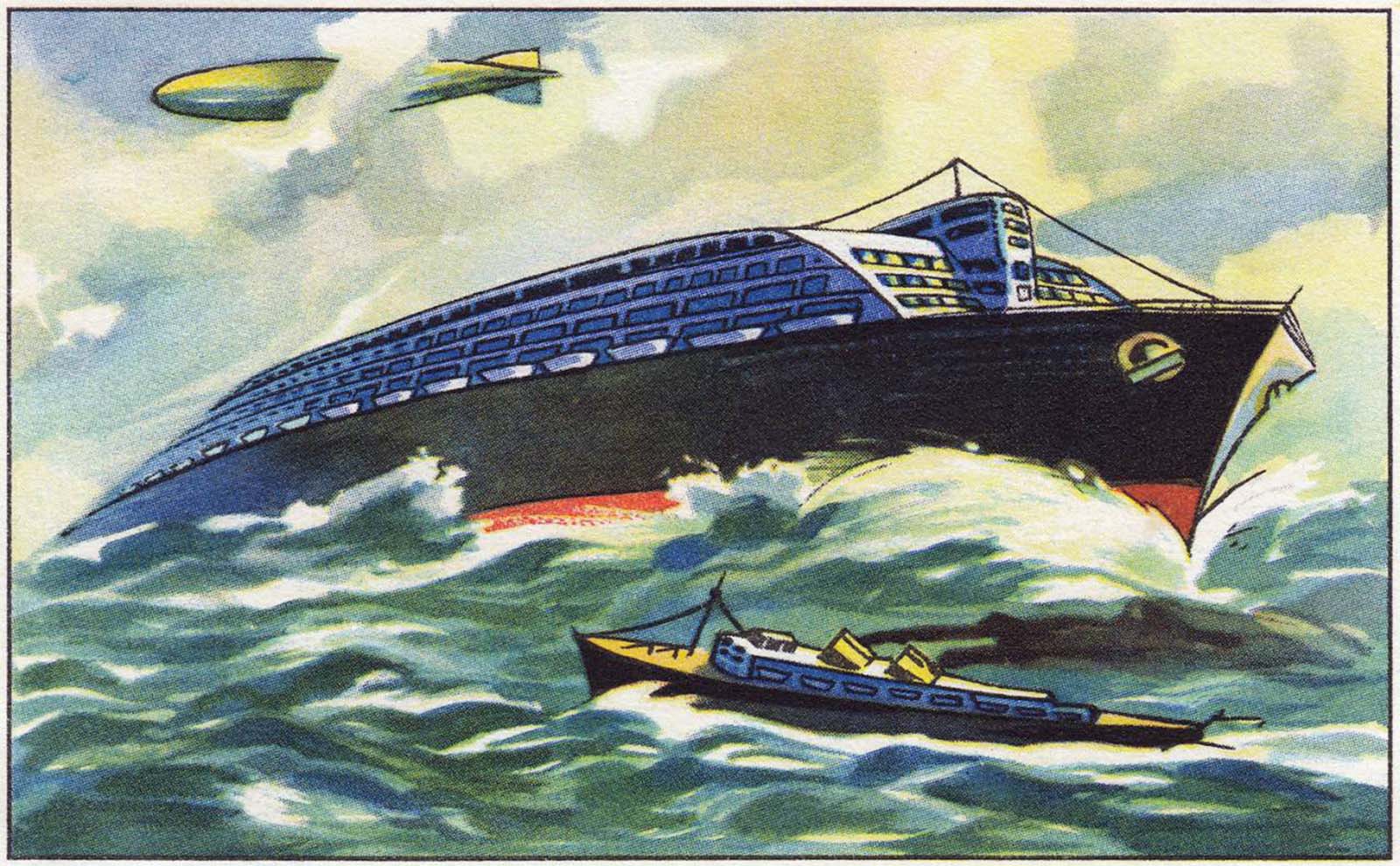

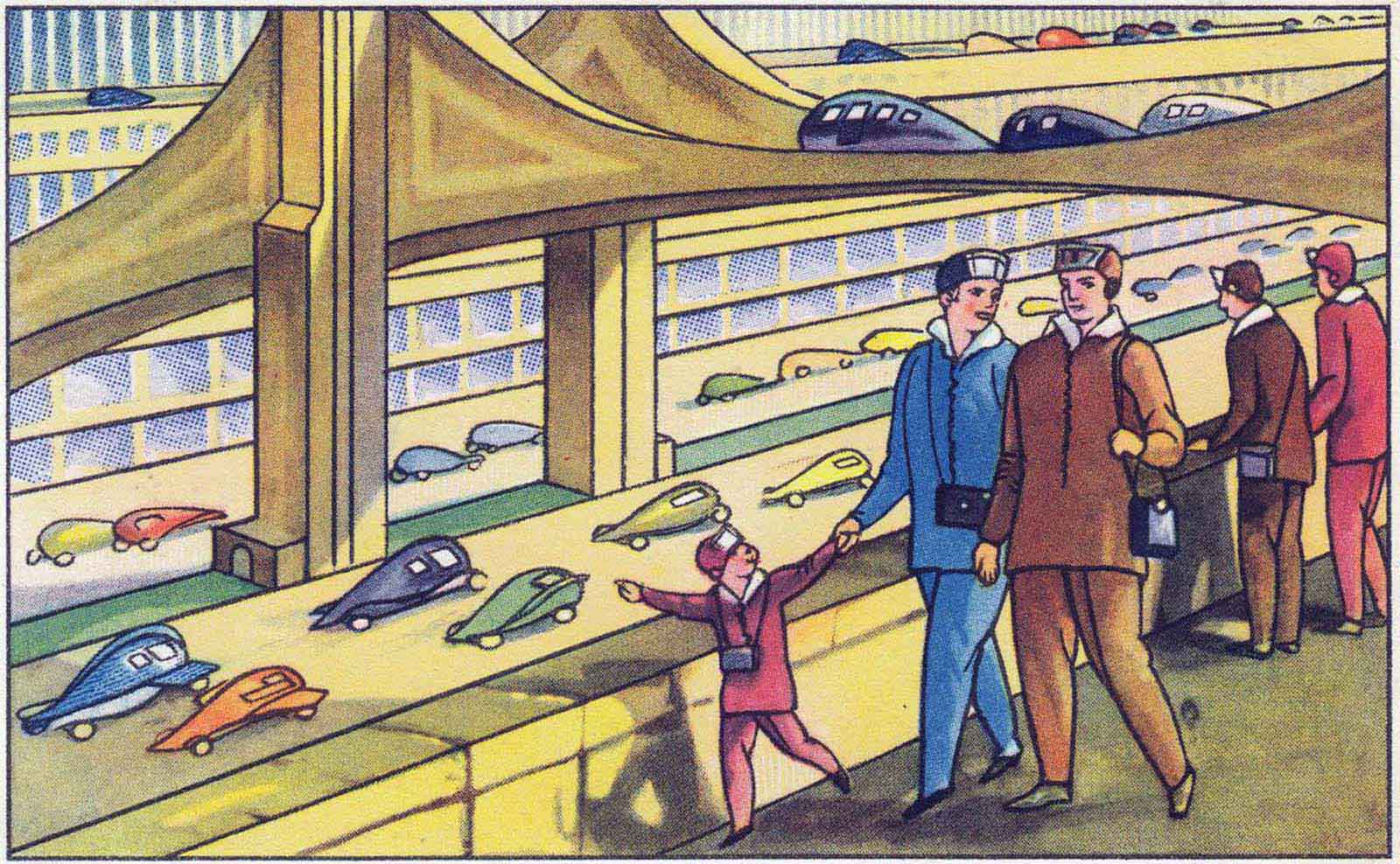

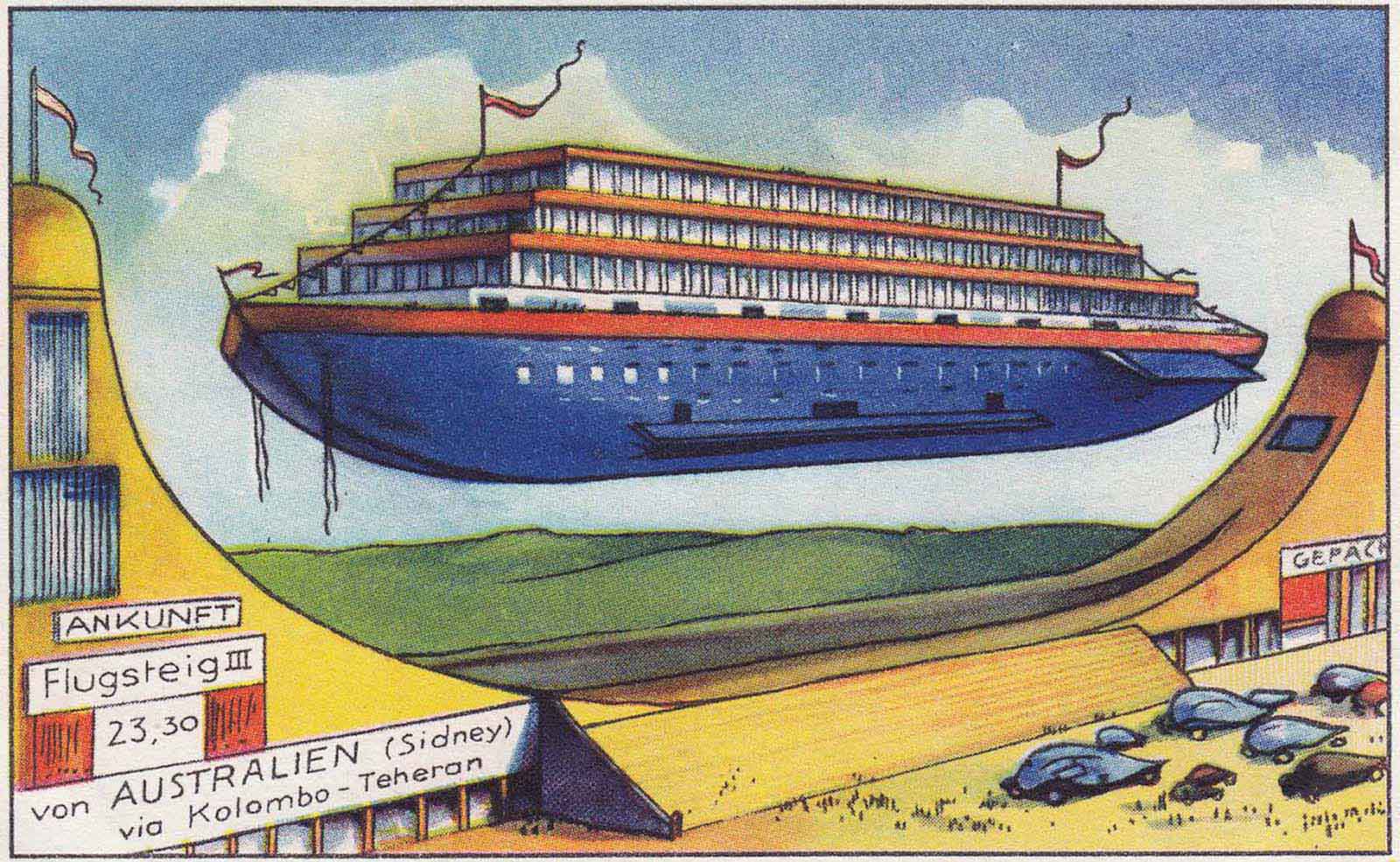

The futuristic images are from trading cards published by the Echte Wagner margarine company in early 20th Century Germany.